Amazon Aurora Serverless: A Love–Hate Relationship

As mentioned in a previous post, we migrated to Amazon Aurora Serverless in mid-June of last year. In this post, I will be discussing what Aurora Serverless is, why we used it, and why we migrated off of it.

What is Aurora Serverless?

As AWS describes, Aurora Serverless is an "on-demand, auto-scaling configuration for Amazon Aurora (MySQL-compatible and PostgreSQL-compatible editions), where the database will automatically start up, shut down, and scale capacity up or down based on your application's needs." The promise is that it takes care of scaling your database on demand as your load increases or decreases. With regular RDS, by contrast, you have to manage capacity manually based on your anticipated demand. That means you either over-provision your database servers and bear the cost, or under-provision and risk an outage.

One other nice feature of Aurora Serverless is that it does not require you to declare your primary and read replicas in your application. It exposes a single endpoint, since it is a single instance that is switched on demand to a larger or smaller size.

How does it work?

As detailed in this page, it works by having a warm pool of instances and a fleet of proxies in front of them. When scaling is triggered, the proxy holds new connections while the engine switches to the new instance. And if you have the "Force the capacity change" option enabled, the proxy will drop the connections that are preventing the scaling.

When scaling is triggered, Aurora tries to find a scaling point: a moment where there are no queries or locks preventing it from switching instances. If the "Force the capacity change" option is enabled, it will kill the blocking queries and scale the capacity after a timeout period (5 minutes). Notice that no new queries will be accepted until the scaling is complete, so we had to enable that option because we would rather lose some queries than uptime. An outage at Zid means lost transactions, which translates to mad merchants.
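To make the option concrete, here is a minimal sketch of how the same setting can be expressed through the RDS API with boto3, assuming a MySQL-compatible Aurora Serverless v1 cluster. The helper names, the cluster identifier, and the capacity values are hypothetical and are not our actual configuration. The `TimeoutAction` and `SecondsBeforeTimeout` fields of `ScalingConfiguration` correspond to the "Force the capacity change" option and the timeout described above.

```python
from typing import Any, Dict

# Capacity values (ACUs) accepted by Aurora Serverless v1 for MySQL-compatible clusters.
VALID_ACUS = (1, 2, 4, 8, 16, 32, 64, 128, 256)


def build_scaling_configuration(
    min_acus: int,
    max_acus: int,
    force_capacity_change: bool = True,
    seconds_before_timeout: int = 300,
) -> Dict[str, Any]:
    """Build the ScalingConfiguration dict passed to modify_db_cluster.

    Hypothetical helper for illustration only.
    """
    if min_acus not in VALID_ACUS or max_acus not in VALID_ACUS:
        raise ValueError(f"capacity must be one of {VALID_ACUS}")
    if min_acus > max_acus:
        raise ValueError("min_acus must not exceed max_acus")
    # The RDS API accepts a timeout between 60 and 600 seconds (default 300).
    if not 60 <= seconds_before_timeout <= 600:
        raise ValueError("seconds_before_timeout must be between 60 and 600")
    return {
        "MinCapacity": min_acus,
        "MaxCapacity": max_acus,
        # Assumption: a production cluster should never pause itself.
        "AutoPause": False,
        # Force: drop whatever blocks the scaling point once the timeout expires.
        # Rollback: give up on the capacity change instead.
        "TimeoutAction": (
            "ForceApplyCapacityChange"
            if force_capacity_change
            else "RollbackCapacityChange"
        ),
        "SecondsBeforeTimeout": seconds_before_timeout,
    }


def apply_scaling_configuration(cluster_id: str, config: Dict[str, Any]) -> None:
    """Apply the configuration to a cluster (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without it

    rds = boto3.client("rds")
    rds.modify_db_cluster(
        DBClusterIdentifier=cluster_id,
        ScalingConfiguration=config,
    )


if __name__ == "__main__":
    # Hypothetical capacity range; 300 seconds matches the 5-minute timeout.
    print(build_scaling_configuration(min_acus=8, max_acus=256))
```

With `force_capacity_change=False` the same builder produces the rollback behaviour, where Aurora abandons the capacity change rather than dropping connections.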

Why did we choose it?

The appeal was that Aurora Serverless would hide all the complexity of database capacity management, the same way that RDS does with database provisioning. Also, unlike our previous setup, the applications would not need to know about replicas and their replication latency. It looked like a perfect solution, and scaling was seamless in our testing on non-production environments.

Where did it become ugly?

Moving to production, we found where serverless fails to deliver on its promises, given the spiky nature of our traffic, which is triggered by sudden merchant campaigns. As discussed earlier, "serverless" is, in reality, very "serverful". The scaling process involves switching traffic from one instance to another and, more importantly, replicating the data between instances (Amazon does not explain how this works).

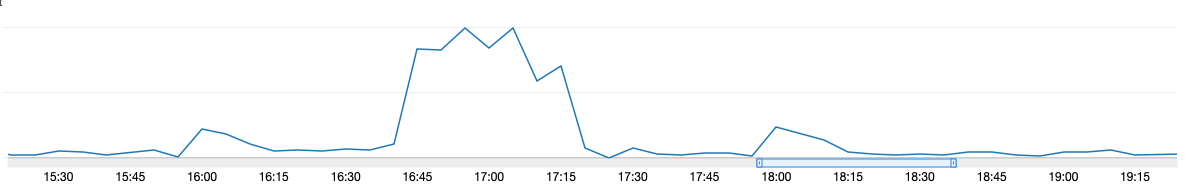

One recent incident was caused by merchants racing to take advantage of the COVID-19 curfew and the push to online shopping. Look at the spike in connections in the following graph.

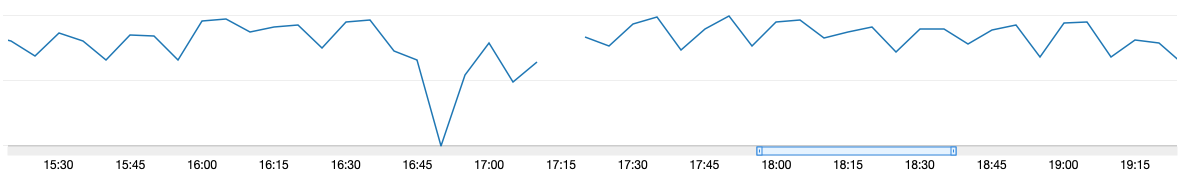

And the following corresponding queries graph.

Notice the gap: ten full minutes with zero queries executed. And yes, we were able to mitigate the severity of this using caches. The site was mostly browsable, but orders could not be placed.

Aside from potential outages, we had other concerns, like monitoring, which is where "serverless" is actually "serverless." Because there is no actual instance for the database, the Performance Insights option in RDS cannot be enabled, which means that you are flying blind outside of MySQL's logs. This made it a struggle to understand our performance characteristics around the database. We have good visibility into our applications, cache clusters, and Kubernetes. But the database is a black box in that regard.

Another issue is that we cannot create read replicas to use for analytical jobs or to prevent a full outage in case the master goes down. Aurora does have an interesting variation of this called cloning, which uses a copy-on-write protocol.

We were also concerned that we might run out of room to scale, as we were frequently hitting the ceiling of what Aurora Serverless can offer: 256 capacity units, which come with 488 GB of RAM. Amazon did promise us larger instances but gave neither an expected date nor a rough timeline.
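As a rough back-of-the-envelope sketch of that ceiling, the snippet below works out the memory per capacity unit from the two figures above and counts how many scale-up steps remain from a given capacity. It assumes capacity moves through the power-of-two values (1, 2, 4, ... 256), and the function names are made up for illustration.

```python
MAX_ACUS = 256    # ceiling quoted above
MAX_RAM_GB = 488  # RAM at that ceiling, as quoted above


def ram_per_acu_gb() -> float:
    """Approximate memory per capacity unit implied by the ceiling figures."""
    return MAX_RAM_GB / MAX_ACUS


def doublings_left(current_acus: int) -> int:
    """Count the doublings remaining before hitting the ceiling.

    Assumption: capacity only takes power-of-two values up to MAX_ACUS.
    """
    if current_acus < 1:
        raise ValueError("current_acus must be at least 1")
    steps = 0
    while current_acus < MAX_ACUS:
        current_acus *= 2
        steps += 1
    return steps


if __name__ == "__main__":
    print(f"{ram_per_acu_gb():.2f} GB of RAM per capacity unit")
    for acus in (64, 128, 256):
        print(f"at {acus} units: {doublings_left(acus)} doubling(s) left")
```

Once a cluster sits at 128 units during normal peaks, there is only a single doubling left to absorb a campaign spike, and none at all at 256.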

Moving forward

Aurora Serverless served us well during the first couple of months. It allowed us to focus on tuning the other pieces of our infrastructure. And we will still use it for less spiky workloads like testing databases and other internal tools. As for our production setup, we have moved to Amazon Aurora (non-serverless), given the competitive pricing (it is actually cheaper than serverless) and the overall better performance compared to RDS MySQL. We started with a simple single-writer, multi-reader architecture, and we will soon be experimenting with cross-region replicas to bring data closer to our customers.